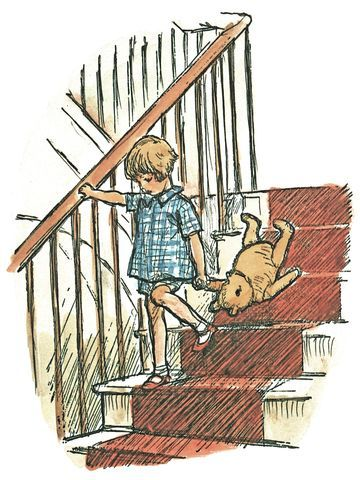

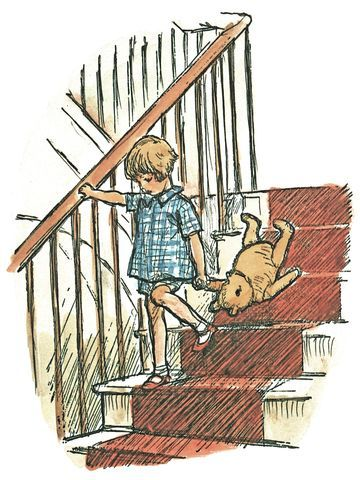

The tl;dr of this text is that I spend too much time and energy managing file outputs from computational workflows. I feel like Winnie-the-Pooh being dragged down the stairs, “there must be a better way; if only he could stop bumping for a moment to think of it.”

In the ramblings that follow I demonstrate a common source of file naming issues and try to show how it relates to popular workflow tools from GNU Make via Luigi to CWL and WDL. I distinguish two categories of tools: Those where all outputs share the same namespace, and those where they don't. The latter are potentially much more useful because they enable more automation of file naming.

How the typical problem arises

In my research I often find myself in the following situation. I have produced a bunch of Python scripts or specialized command-line tools to transform some input files to an output file. The relevant part may look like this:

    indata = load_data()
    outdata = do_analysis(indata)
    fig = generate_fig(outdata)
    fig.savefig('figure.png')

Note that the implementation is packed with magic constants:

- The load_data() function takes no parameters, so it must contain some file paths or similar.

- do_analysis(indata) may also have hard-coded parameters to algorithms, etc.

- figure.png is a great name for a figure, as long as you only have one...

But I am not worried about any of this, because my wonderful script produces the file figure.png which looks great, and by the way it's Friday afternoon and we have more important things to do than clean up this code.

Then it starts growing

So far so good. The problem arises on Monday morning when I return to work and realize a problem with last Friday's decision on the parameter value k = 3, hidden deep inside generate_fig(). I need to check what happens with k = 4. I am not stupid, so I realize I will want to play with different values of k and I separate it out as a function argument. The revised script:

    k = 4

    indata = load_data()
    outdata = do_analysis(indata)
    fig = generate_fig(outdata, k)
    fig.savefig(f'figure-k_{k}.png')

OK, excellent, I re-run the script and look at my new figure-k_4.png which looks even more interesting. Maybe I should try this for a few more values, and by the way, I also want to dump the outdata to a file for later inspection.

    ks = [3, 4, 7, 26]

    indata = load_data()
    outdata = do_analysis(indata)
    outdata.to_hdf('outdata.h5', key='df')

    for k in ks:
        fig = generate_fig(outdata, k)
        fig.savefig(f'figure-k_{k}.png')

Nice! Now another issue is that I want to switch out part of the indata, so I change the script a bit more and also organize the outputs into different directories:

    import os

    import eust

    # Parameter sets
    ks = [3, 4, 7, 26]
    eurostat_tables = {
        'apro_cpshr': '2019-04-12 23:00:00',
        'apro_cpnhr_h': '2019-03-21 23:00:00',
    }

    # Actual work
    common_data = load_data()
    for name, version in eurostat_tables.items():
        outdir = f'{name}-{version}'
        os.makedirs(outdir, exist_ok=True)
        eurostat_data = eust.read_table_data(name, version)
        outdata = do_analysis(common_data, eurostat_data)
        outdata.to_hdf(os.path.join(outdir, 'outdata.h5'), key='df')
        for k in ks:
            fig = generate_fig(outdata, k)
            fig.savefig(os.path.join(outdir, f'figure-k_{k}.png'))

Where it always ends up

OK, so you see where this is going. The list of parameters grows, and the naming schemes for the directories and files get ever more complicated. One parameter added to the soup, another removed. Soon enough I have created myself a Makefile (see real-world example here) to keep track of all the outputs.

Note the os.makedirs(outdir, exist_ok=True) above. I'm not sure how many times I have had a 10-minute calculation crash because of a FileNotFoundError or a FileExistsError raised when dumping the outdata.
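
One way to avoid this class of crash is to route every output path through a small helper that creates the parent directory first. The following is only a minimal sketch: the helper name output_path is hypothetical (it is not part of any library), the file and directory names are placeholders, and it assumes that overwriting an existing file on a re-run is acceptable.

```python
import os
import tempfile


def output_path(outdir, filename):
    """Return outdir/filename, creating outdir first if it is missing.

    Hypothetical helper for illustration, not part of any library.
    """
    os.makedirs(outdir, exist_ok=True)  # no FileExistsError on re-runs
    return os.path.join(outdir, filename)


# Usage: the directory is created on demand, so open() cannot fail
# with FileNotFoundError because of a missing parent directory.
root = tempfile.mkdtemp()
path = output_path(os.path.join(root, 'apro_cpshr', 'k_4'), 'outdata.txt')
with open(path, 'w') as f:
    f.write('42\n')
```

The helper does nothing about the naming problem itself; it only makes sure that a long calculation is not lost because of a missing directory.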

And let's not even talk about the naming schemes of the cache files I start to keep for some of the intermediate operations. Somehow these projects always evolve into a living hell of subdirectory creation, encoding of parameter values in file names, and manual cache invalidation. Oh, the cache invalidation.
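
To make the cache naming problem concrete, here is a minimal sketch of one common workaround: deriving the cache file name from a hash of the parameters, so that a changed parameter automatically maps to a new file. The function name cache_path, the cache directory and the file suffix are all hypothetical, and the sketch assumes the parameters are JSON-serializable. It does not notice changes to the code or to the input files, so it removes only part of the manual invalidation work.

```python
import hashlib
import json
import os


def cache_path(cache_dir, task_name, params):
    """Build a cache file path that changes whenever params change.

    Hypothetical helper: params must be JSON-serializable.
    """
    # sort_keys makes the hash independent of dict insertion order
    blob = json.dumps(params, sort_keys=True).encode('utf-8')
    digest = hashlib.sha256(blob).hexdigest()[:12]
    return os.path.join(cache_dir, f'{task_name}-{digest}.cache')


p3 = cache_path('cache', 'generate_fig', {'k': 3, 'table': 'apro_cpshr'})
p4 = cache_path('cache', 'generate_fig', {'k': 4, 'table': 'apro_cpshr'})
```

Here p3 and p4 are different paths, while the same parameters always give the same path regardless of the order in which they are listed.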

Some days I literally think I might go crazy.

Looking closer: What is the problem really?

As a result I have spent a disturbing amount of time thinking of solutions to the General Problem of running computational workflows and managing the outputs. But before diving into the solutions part, I want to elaborate a little bit on the problem.

Thinking ahead is not an option

One important insight for me is that these exploratory data analysis tasks by nature grow in hard-to-predict ways. Separating out all the possible parameters from the start and naming the file outputs accordingly is not realistic since I do not even know what they are. I could just as well have realized that the parameter k was not important, but maybe sigma_x is? So I constantly change the scripts to accommodate the most recent idea.

Thus, there is no Grand Solution to the issue of changing specifications. The changing definition of the problem is part of the process. The set of parameter values will change: should I sweep k or sigma_x or both? The plumbing between these operations will change: should I do this data cleaning operation before or after the data subset selection?

A dependency graph of side effects

Another part of the problem, and maybe of the solution as we shall see, is that data analysis tools rely heavily on side effects (files, in this case), while the overall structure of the workflow can be modelled in a more abstract, functional way.

File outputs are essential to connect different tools like Python, R, bash, and various command line tools. They are also useful because we can inspect and visualize outputs using Excel, SQLite browser, image viewers, etc. These tools are and will remain the core of laptop-scale data processing for the foreseeable future.

By contrast, the plumbing between tasks lends itself very well to more abstract, functional thinking: the output set y is obtained by mapping function f over the input set x, the output z is an aggregation of the whole set x, etc. It is natural to see a data analysis workflow as a directed acyclic graph of operations. Although each one of those steps is actually a mess of imperative programs, side effects in file systems, etc., the overarching structure is easiest to express in functional terms.

Let's look again at the example but without all the file handling stuff:

    common_data = load_data()
    for name, version in eurostat_tables.items():
        eurostat_data = eust.read_table_data(name, version)
        outdata = do_analysis(common_data, eurostat_data)
        for k in ks:
            fig = generate_fig(outdata, k)

Forget for a moment that these are nested loops, and see them more abstractly as nested mappings of operations:

- common_data is defined at the root. There is only one of it.

- eurostat_data and outdata both have one item for each entry in eurostat_tables. They are mappings from the set of items in eurostat_tables.

- fig is nested below outdata. It can be seen as a mapping from the product (eurostat_tables × ks), or if you prefer as a sort of two-dimensional object, a set of sets of output files.

(By the way, what I have not shown here is that many workflows involve aggregating functions, i.e., operations that reduce the dimension of something. In some cases these aggregations can be formulated as fold operations, but they are often more general, i.e., potentially require access to the whole set of inputs. This is an important part of the graph structure too, but let's leave it for now.)
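
The nesting and aggregation described above can be written down without any loops or files. The following is a toy sketch with stand-in functions: the bodies of do_analysis and generate_fig are made up for illustration, eurostat_tables is simplified to a list of names without versions, and plain strings stand in for real data and figures.

```python
from functools import reduce
from itertools import product

ks = [3, 4]
eurostat_tables = ['apro_cpshr', 'apro_cpnhr_h']


def do_analysis(table):          # stand-in for the real analysis
    return f'analysis({table})'


def generate_fig(outdata, k):    # stand-in for the real plotting
    return f'fig({outdata}, k={k})'


# One outdata item per table: a mapping from eurostat_tables
outdata = {name: do_analysis(name) for name in eurostat_tables}

# One fig per (table, k) pair: a mapping from eurostat_tables x ks
fig = {
    (name, k): generate_fig(outdata[name], k)
    for name, k in product(eurostat_tables, ks)
}

# An aggregation reduces a dimension; here a fold over all outdata items
total_length = reduce(lambda acc, item: acc + len(item), outdata.values(), 0)
```

The keys of fig are exactly the (table, k) pairs, which is the information that otherwise ends up encoded by hand in directory and file names.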

A main reason I am constantly dealing with file naming issues is that the tasks are nested inside one another. In the first step of the example above we had a single figure.png, but very soon we had to produce a whole range of similar figures named {name}-{version}/figure-k_{k}.png. The clean and beautiful world of workflow graphs collides with the ugly truth of side effects like output files.

Side note: Drawing the line between tasks and the graph structure

This section is somewhat of a side note, but I find it relevant to elaborate a little on this proposed dichotomy.

I'm not proposing there is a strict or clear line to be drawn between the imperative-style operations and the functional-style structure of a computational workflow. My point is that in every data analysis project I have ever done, it has been natural to think of some operations as atomic, i.e., as isolated and irreducible in some sense, for example:

- Join these two tables and dump to a csv file.

- Download this URL and save to a certain file name.

- Calculate the mean pixel value of this GIS raster dataset in each of these geographic regions.

I am not even going to try to define what makes an operation “atomic”. I don't think there is a useful definition.

But many seem to share the intuition that some operations can and should be bundled into logical units of work. Just look at the examples from some popular workflow tools (CWL, WDL, Apache Airflow, Luigi, Snakemake, doit, and many others). They all have some notion of a Task as the fundamental unit of work. A Task can encapsulate more or less any program execution, while the encapsulating workflow languages typically only allow directed acyclic graphs.

The solution: namespaces for files (a.k.a. directories)

Therefore, I think the solution is to provide a nice and simple way to do the plumbing between Python functions, R scripts, bash scripts, whatever, that provides some abstraction from the awful truth that intermediate file outputs have to be stored somewhere.

To sum up the ramblings so far, I have concluded three things:

- Exploratory data analysis workflows tend to consist of “atomic” operations that create files with intermediate and final results. Since the interconnections between these operations change all the time, the output files have to be renamed too.

- In particular, many of the naming issues arise when nesting/mapping operations over sets of input data files, parameters, etc. In the end, I am often interested in output file sets that are best defined as a mapping from some set of inputs. Therefore it is very useful if file outputs of such mappings or nested operations can be automatically named in a meaningful way.

- Workflow tools like GNU Make and its many descendants (pun intended) are a natural and useful choice to define the plumbing between separate tasks.

My two proposals are therefore the following:

- Let's use workflow systems to abstract away as much as possible of the renaming of files that is needed to connect different tasks to each other.

- Let nesting/mapping operations be explicitly represented in workflow languages. Then a useful naming of output files can be automated and our limited time and mental capacity be directed to more interesting problems than naming files.
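
To make the second proposal a little more concrete, here is a minimal sketch of what automated naming could look like in plain Python. Everything in it is hypothetical: the function names map_task and write_fig, the name_value directory scheme, and the text file standing in for a figure. It only illustrates that once the mapping is explicit, the output location can be derived from it instead of being typed by hand.

```python
import os
import tempfile


def map_task(task, param_name, values, parent_dir):
    """Run task once per value, each call in its own subdirectory.

    Hypothetical helper: the directory name is derived from the
    parameter, so the task itself never has to invent unique names.
    """
    results = {}
    for value in values:
        workdir = os.path.join(parent_dir, f'{param_name}_{value}')
        os.makedirs(workdir, exist_ok=True)
        results[value] = task(value, workdir)
    return results


def write_fig(k, workdir):
    # The task always uses the same simple file name
    path = os.path.join(workdir, 'figure.txt')
    with open(path, 'w') as f:
        f.write(f'figure for k={k}\n')
    return path


root = tempfile.mkdtemp()
# Nesting: one directory level per mapped parameter
figs = {
    name: map_task(write_fig, 'k', [3, 4], os.path.join(root, f'table_{name}'))
    for name in ['apro_cpshr', 'apro_cpnhr_h']
}
```

The task write_fig only ever writes figure.txt; the uniqueness comes entirely from the directory that the mapping assigns to each call.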

GNU Make and siblings use a single namespace

GNU Make is useful for automating workflows. However, it is not useful for automating workflows that are deeply nested and rapidly changing. Since all the targets are defined in the same namespace (the same output directory, concretely), they have to be uniquely named, and this has to be done manually. As far as I understand, the same goes for popular tools like Luigi, Snakemake, and doit (but please correct me if I am wrong).

I still use GNU Make though, mainly because it is so clear and direct, easy to get started with, and because it runs really fast.

WDL creates separate namespaces

In contrast, the workflow languages CWL and WDL (and please let me know about others) define a new namespace for each task, so that the separate tasks become more like functions. To illustrate, in Python I write

    def f(x, y):
        return x + y

    a = load_data()
    b = load_data()
    c = f(a, b)

When f is called here, the arguments are assigned the (temporary) names x and y and inside the function body that is all we need. The function f will never know that a and b were loaded from the same function. In a similar way, a WDL task is like a function that also abstracts away input file names, something like this:

    task load_data {
        command { echo foo > bar }
        output { File out = "bar" }
    }

    task f {
        File x
        File y
        command { cat ${x} ${y} > temp }
        output { File z = "temp" }
    }

    workflow w {
        call load_data as a
        call load_data as b
        call f { input: x=a.out, y=b.out }
    }

The important point here is that the WDL workflow is executed using a temporary working directory for each task call and substituting the file name placeholders x and y for whatever temporary file names seem suitable. The task load_data concretely created a file output called bar, but this will never be known to the task f.
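
The same principle can be imitated in a few lines of Python. This is a toy sketch of the idea only, not a description of how Cromwell or any other WDL engine is implemented; the helper call_task is hypothetical, and load_data and f are written to mirror the two tasks above.

```python
import os
import shutil
import tempfile


def call_task(task, **inputs):
    """Run task in a fresh working directory and return its output path."""
    workdir = tempfile.mkdtemp()
    # Each task names its output relative to its own private directory
    return os.path.join(workdir, task(workdir, **inputs))


def load_data(workdir):
    with open(os.path.join(workdir, 'bar'), 'w') as out:
        out.write('foo\n')
    return 'bar'


def f(workdir, x, y):
    # f only sees the placeholders x and y, never the name 'bar'
    with open(os.path.join(workdir, 'temp'), 'wb') as out:
        for path in (x, y):
            with open(path, 'rb') as src:
                shutil.copyfileobj(src, out)
    return 'temp'


a = call_task(load_data)
b = call_task(load_data)   # same file name 'bar', different directory
z = call_task(f, x=a, y=b)
```

Both calls to load_data write a file called bar, but because each call gets its own directory the two outputs never collide.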

WDL can also do a lot of other cool things that I have not mentioned here. I suggest you check it out.

(CWL is in principle also a cool project, but in practice I find its YAML-based syntax to be clunky and hard to read. By comparison, WDL is exquisitely readable and writeable considering how much power the language has.)

Why I'm not using WDL

Almost only for one reason: It is slow. WDL is designed to run big bioinformatics pipelines on clusters, and therefore the execution engine Cromwell has not been optimized to run small laptop-scale workflows fast. Executing the workflow above with the latest Cromwell engine takes about 30 seconds on my laptop. By comparison, I timed the Makefile execution to 6 milliseconds ;)

Final words

If you read through all these ramblings I hope you found it interesting. I wrote it mostly to clarify my own thinking. If you had the patience to read and you found it interesting, then probably I would find it interesting to talk to you about file names, or whatever. Pop me an email on workflows [at] rasmuseinarsson.se.

If there were a lightweight execution engine for local laptop-scale jobs I would definitely use WDL, because I think they have done many things right (not just the file naming business). I have come close to trying to build a simple execution engine myself.

Sometimes I also put a day or two into hacking on another tool with a friend (we'll let you know if it gets anywhere near useful).

Now I have spent way too much time complaining about all this. I have to get back to renaming file outputs.

“Here is Edward Bear, coming downstairs now, bump, bump, bump, on the back of his head, behind Christopher Robin. It is, as far as he knows, the only way of coming downstairs, but sometimes he feels that there really is another way, if only he could stop bumping for a moment and think of it. And then he feels that perhaps there isn't.”